Every online business needs to consult an SEO at some point, and for a webmaster, understanding SEO will always be helpful. So let me share a complete list of basic SEO tips that every webmaster should know.

Feel free to skip any section that you find too basic.

- Links And Anchor Text

- Use of Robots.txt

- How to Contact Webmasters

- Keyword Research

- Check for Duplicate Content

- Use Traffic Analysis Tools Efficiently

- Google Operators

- Find nofollow links without HTML

- Understand Meta Tags

- Google Search Console

- Bing Webmaster Tools

- XML Sitemaps

- W3C Validator

- Google Alerts

- Canonical URLs

- Analyze Server Error Logs

- Analyze Site Performance

- Mobile SEO

- Server Header

- Wayback Machine

- FTC Guidelines

- WordPress

- Breadcrumb

- Ratings

- Other Rich Snippets

- Link Sculpting

- All Links from the Same Page aren’t Equal

- Deep Links

- Disavow tools

- Bad links

- Manual Spam Action

- Difference between site De-indexed and Site Penalized

- X-Robots-Tag

- Content Scrapers

- Optimize Use of Images

- Minify CSS and JavaScript

- Beautify CSS and JavaScript

- Negative SEO

- Related Content

- A/B Testing’s Impact on SEO

- Multivariate Testing

- HTTP Status Codes

1. Links And Anchor Text

Link building has been the backbone of SEO for years, and going forward, links are still the biggest ranking signal in Google.

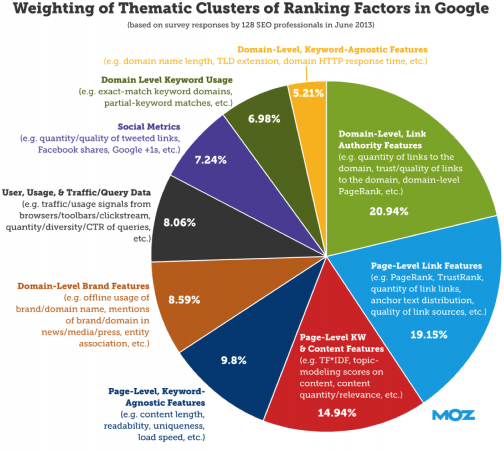

A Moz survey of 128 SEO professionals indicates that link building accounts for approximately 40% of all SEO factors.

Link building has evolved a lot over time. Around 2008, you could easily outrank other sites with link exchanges. In 2010, you could outrank sites with links from blog networks. In 2013, you could win with guest posts or press releases for links. Now, guest posts written only for the sake of links are out of fashion as well.

These steps by Google do not make link building less critical. Google is not decreasing the value of links in its algorithm; it is changing the algorithm so that links built only for the sake of links carry less value. That makes earning genuine links even more important.

The future of link building is finding ways to earn links that look natural rather than the output of a link building process.

2. Use of Robots.txt

It’s robots.txt and not robot.txt, which is where many webmasters goof up. Robots.txt is used to block search engine bots from accessing part (or the whole) of a website. Because it is generally used for keeping search engines away from pages, very few SEO experts make full use of it, thinking that more pages from the domain in Google’s index is better. That is entirely false.

If Google finds tons of pages on your site with very thin content (or links to pages available only to members), it may devalue your other valuable content, assuming the site mostly has thin content.

Ideally, robots.txt should block pages of the site that are unlikely to bring search engine traffic to the website. Some examples of pages that should be blocked are

- CGI-bin

- Images directories

- JavaScript and CSS File Directories

- Site Admin Area or Moderator Area

- Site Search

- Member Profile Pages

- User Control Panel Pages

The above list varies depending on the type of site. As an example, a social networking site may not want to block member profile pages because it may be targeting member names as keywords in search engines. Similarly, an image-hosting site may not want to block site search because search results are content pages for that kind of website.

Nowadays, robots.txt does a lot more than just block content. One later addition is the option to list your XML Sitemap files in the robots.txt file.
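To make this concrete, here is a minimal sketch in Python that checks a robots.txt file the way a crawler would. The rules, paths, and sitemap URL below are made-up examples for www.example.com, not a recommendation for any particular site. The parsing uses Python's standard `urllib.robotparser` module.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site. The blocked paths and the
# sitemap URL are illustrative assumptions only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cgi-bin/
Disallow: /admin/
Disallow: /members/
Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt):
    """Parse robots.txt text without making any network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Article pages stay crawlable, while admin and member pages are blocked.
print(parser.can_fetch("Googlebot", "https://www.example.com/articles/seo-tips"))
print(parser.can_fetch("Googlebot", "https://www.example.com/admin/login"))
print(parser.can_fetch("Googlebot", "https://www.example.com/members/jane"))

# Sitemap lines are exposed separately (Python 3.8 or later).
print(parser.site_maps())
```

Running a check like this before uploading a new robots.txt is a cheap way to catch a rule that accidentally blocks content pages.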

3. How to Contact Webmasters

Every real website has an option to get in touch with the webmaster or the site owner, be it Mark Zuckerberg for Facebook, Larry Page for Google, or the owner of any other website. The point is to understand whom you should be contacting and through what medium. If you only use the whois email to get in touch with the webmaster, you may land in the wrong person’s inbox, and your email will be ignored and deleted.

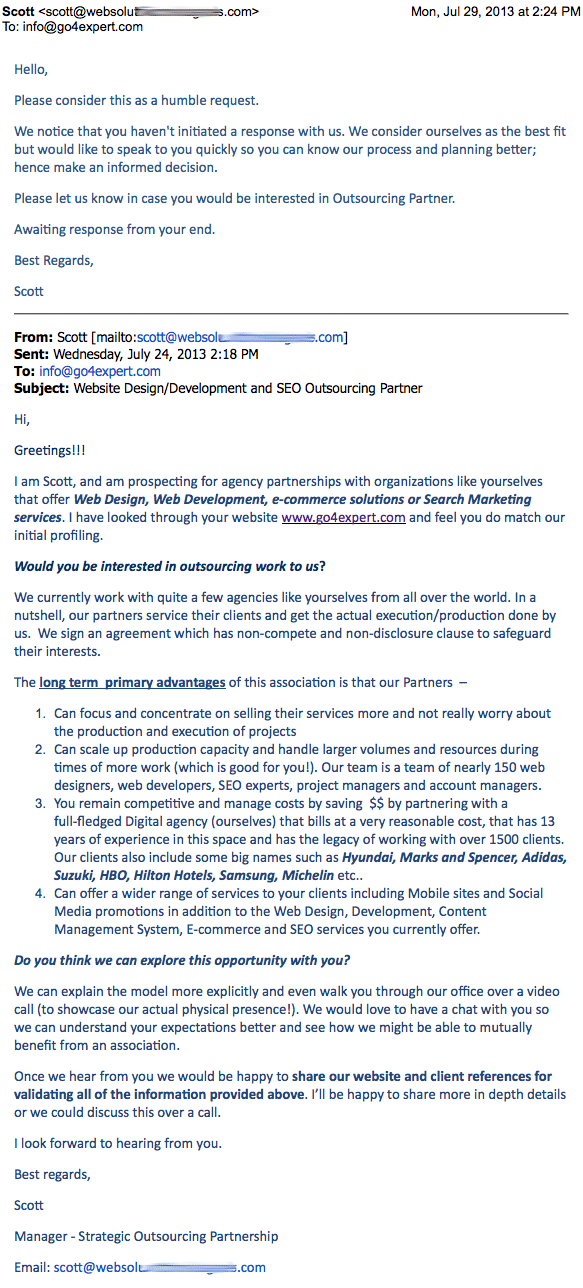

I often get a lot of SEO proposals in my inbox that look pretty similar to this one.

There is no way that this email is genuine, because neither my contact page on Go4Expert nor any other page on my site displays that email address publicly. So either they grabbed my email address from one of the forum emails, or they took it from the whois information. In either case, I can safely delete that email or report it as spam.

4. Keyword Research

Keyword research is the fundamental building block for SEO (as well as PPC Marketing). If you don’t use the right keywords that people are searching for, you may be wasting a lot of your SEO efforts.

Check out the complete guide to keyword research.

Keyword research with the wrong tools can lead to drastically bad results for SEO. If you are using the Google AdWords keyword research tool for SEO, check out Why I don’t Recommend Google’s keyword tool for SEO.

5. Check for Duplicate Content

Copyscape is a free tool that allows you to check any site for duplicate content. The free version allows only five scans daily per domain, and you can only check pages that are already published. However, if you are outsourcing the content or have a writer writing content for you, it makes complete sense to use the pro service to check the content before making it live on your website, to avoid being penalized for duplicate content.

Apart from Copyscape, Grammarly also offers plagiarism checking in its pro version. I prefer and use Grammarly, and you can check out my review of Grammarly, which not only helps check for duplicate content but also helps fix grammatical errors in the content.

6. Use Traffic Analysis Tools Efficiently

Every webmaster should be able to use Google Analytics as well as other analytics tools. Here is a list of traffic analysis tools to track the right information for your website and business as a whole. These tools help track not only website visitors but a lot more than that.

- Uptime tracking

- User behavior tracking

- Site Structure and Navigation

- Error tracking

And so much more. There is a lot one can track. However, as a webmaster, it is essential to understand what you should be monitoring.

7. Google Operators

There are many Google operators that make an SEO’s life easier by allowing search results to be well-targeted. Here is a complete list of Google Operators.

As an example, if you wish to find all the SEO blogs that accept guest posts, you can search in Google: SEO blog intitle:"guest post". Using the intitle operator makes a webmaster’s life a lot easier.

8. Browser plugins to show nofollow links

When doing SEO, human errors can always creep in when adding nofollow to a link, as there is no visual difference between a do-follow and a nofollow link. Having a plugin that displays nofollow links differently from other links can help. It not only helps you confirm that the needed links are nofollow but can also help you find links that you nofollowed accidentally.

There are quite a few Firefox plugins, but I prefer the ChromEdit Plus option, as suggested by Matt Cutts, for a reason: I can edit the CSS as I want. I do that because a single-pixel border around image links with the nofollow attribute breaks the site design.
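If you would rather audit a page with a script than with a browser plugin, the same check can be sketched in a few lines of Python. This is only an illustration, not what any plugin does internally. The sample HTML and the helper name `split_links` are made up for the example.

```python
from html.parser import HTMLParser


class NofollowLinkFinder(HTMLParser):
    """Sort the href of every <a> tag into nofollow and followed lists."""

    def __init__(self):
        super().__init__()
        self.nofollow_links = []
        self.followed_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        # rel can hold several space-separated tokens, in any letter case.
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow_links.append(href)
        else:
            self.followed_links.append(href)


def split_links(html):
    """Return (nofollow_links, followed_links) for the given HTML text."""
    finder = NofollowLinkFinder()
    finder.feed(html)
    return finder.nofollow_links, finder.followed_links


# Hypothetical page fragment used only for this example.
SAMPLE = """
<p><a href="/article">Article</a>
<a href="/privacy" rel="nofollow">Privacy</a>
<a href="https://example.org" rel="noopener NoFollow">Partner</a></p>
"""

nofollow, followed = split_links(SAMPLE)
print("nofollow:", nofollow)
print("followed:", followed)
```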

9. Understand Meta Tags

Meta tags are not confined to the keywords and description of a page. There are a lot of other meta tags that can make or break the site’s SEO, like noindex, nofollow, noodp, noydir, etc. Note that the nofollow meta tag is different from the link-level rel="nofollow" attribute, which only prevents search engines from following an individual link.

The Syntax for robots meta tag is

<meta name="robots" content="nofollow"/>

- noindex – Prevents page from being indexed.

- nofollow – Prevents search engines from following any links on the page.

- noarchive – Prevents a cached copy of a page.

- noodp – Blocks Open Directory Project description being used as search result description.

- noydir – Blocks Yahoo Directory Titles & Descriptions being used in Yahoo search results.

Moreover, to make the site responsive, one has to specify the viewport as a meta tag. So there is a lot more to meta tags than just description and keywords.
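To see which robots directives a page actually carries, you can read them out of the HTML. The sketch below uses Python's standard `html.parser`. The helper name `robots_directives` and the sample markup are assumptions made for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        # The content attribute is a comma-separated list of directives.
        for directive in (attributes.get("content") or "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def robots_directives(html):
    """Return the set of robots meta directives found in the HTML text."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page head used only for this example.
SAMPLE_HEAD = '<head><meta name="robots" content="noindex, nofollow"/></head>'

found = robots_directives(SAMPLE_HEAD)
print(sorted(found))
print("indexable:", "noindex" not in found)
```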

10. Google Search Console

Google Search Console provides a lot of reports to help improve the site’s HTML and reduce errors. The couple of reports that I often use are

- Performance Reports – Under the performance reports, there is an Average Position for keywords that I like to revisit regularly to find keywords where I am not ranking very high. As an example, keywords with an average position worse than 10 are an opportunity, and improving the content usually helps me rank better. Moreover, Google shares search terms instead of keywords, so we know what users are searching for in Google before coming to the website.

- Enhancements Reports – Help with AMP errors, mobile usability, site speed, and so much more. It is one of those areas of the search console that helps iron out any issues the site may have, including not found pages.

Moreover, there are many other reports, including rich snippets and external and internal linking, to help a webmaster with SEO. Playing around in Google Search Console is one of the best tips one can give to a webmaster for SEO.

11. Bing Webmaster Tools

Bing Webmaster Tools has some cool SEO tools that aren’t even part of Google Search Console. If you remember, Bing pioneered the link Disavow tool, and only then did Google join the race. Bing continues to work on tools that help webmasters with SEO.

Some of the Bing tools that I love are

- Bing Link Explorer Tool to help you analyze links to any site (read competitor) that you don’t own.

- SEO Analyzer to Analyze on Page SEO Elements that you may have missed.

12. Submit XML Sitemaps to Search Engines

Almost every CMS has an option to generate an XML-based sitemap, either through a plugin or as part of the core functionality, and can also notify search engines like Google / Bing about changes in the sitemap.

Note: Notifying Google about a change in the sitemap is different from submitting the sitemap in your Webmaster account.

Once you add the XML sitemap in your Google / Bing Webmaster account, you can view a lot more details about the index status of the URLs as well as any errors or warnings in the sitemap.
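If your CMS does not generate a sitemap for you, a basic one is easy to build. Here is a minimal sketch in Python that follows the sitemaps.org format with `loc` and `lastmod` entries. The URLs and dates are placeholders, and `build_sitemap` is a name made up for this example.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs, lastmod as YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages for an example site.
PAGES = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/seo-tips?lang=en&page=2", "2024-01-10"),
]

sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

Building the file with an XML library rather than string concatenation means characters like `&` in URLs are escaped correctly.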

13. W3C Validator

HTML is a forgiving markup language, which means you can have wrong or missing HTML tags and still get the layout you intend.

For example, you may have an extra opening DIV that you forgot to close, or an extra closing DIV tag at the end of your page. Neither may impact the layout of the content in the browser, but each is still an error that needs to be fixed.

To make your HTML error-free, the W3C HTML Validator is a handy tool that every webmaster should be aware of. Even if you are not a developer, it is essential to check that your developer hasn’t missed anything when constructing the final HTML.

14. Google Alerts

Google Alerts is a service by Google that sends email notifications for search terms you wish to monitor in Google search results. The ideal use of Google Alerts is keeping an eye on mentions of your brand name or your competitors’ brand names in blogs, forums, or anywhere on the internet.

Let us take a simple example. I want to know who is saying what about my site BizTips. So I can create a Google alert for the following terms

BizTips -site:biztips.co

Any mention of my website name BizTips outside my own site will trigger the alert. So when any website, blog, or forum on the internet mentions my site, I am notified by Google.

I prefer to set the email frequency to as-it-happens, but you can also opt for once a day or even once a week.

You can do the same for your competitor’s name as well. 🙂 All you have to do is create a Google alert for your competitor’s name instead of yours.

15. Canonical URLs

You can have multiple URLs for the same content. Out of all those URLs, the canonical URL is the preferred version.

An example of where a canonical URL helps: if you have a product listing with sorting options, then a page can display results sorted alphabetically or based on other factors.

http://www.example.com/product-type-1.php?sort=alphabetical

Or

http://www.example.com/product-type-1.php?sort=others

The sort parameter changes the content of the page only slightly, so almost the same content is available on multiple URLs. So you need to add a rel="canonical" link to the <head> section of the product page like this

<link rel="canonical" href="http://www.example.com/product-type-1.php" />

It tells search engines that even though the URL of the page is different, the content is the same, and the URL given in the link is the canonical version.
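On a dynamic site, you would normally compute the canonical URL instead of hard-coding it. Here is a small sketch in Python, assuming `sort` is the only parameter that changes presentation without changing content. The set `PRESENTATION_PARAMS` and both function names are made up for this example.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these query parameters only reorder the same content.
PRESENTATION_PARAMS = {"sort"}


def canonical_url(url):
    """Drop presentation-only query parameters and any fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in PRESENTATION_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(url):
    """Render the <link> tag to place in the page's <head>."""
    return '<link rel="canonical" href="%s" />' % escape(canonical_url(url), quote=True)


print(canonical_link_tag("http://www.example.com/product-type-1.php?sort=alphabetical"))
print(canonical_url("http://www.example.com/product-type-1.php?sort=others&page=2"))
```

Parameters that do change the content, like `page` in the second call, are kept, so each page of results still gets its own canonical URL.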

16. Analyze Server Error Logs

SEO is not only confined to HTML and Web Development, but it also includes the ability to analyze server error logs for 404 not found errors or Internal Server Errors or any other error.

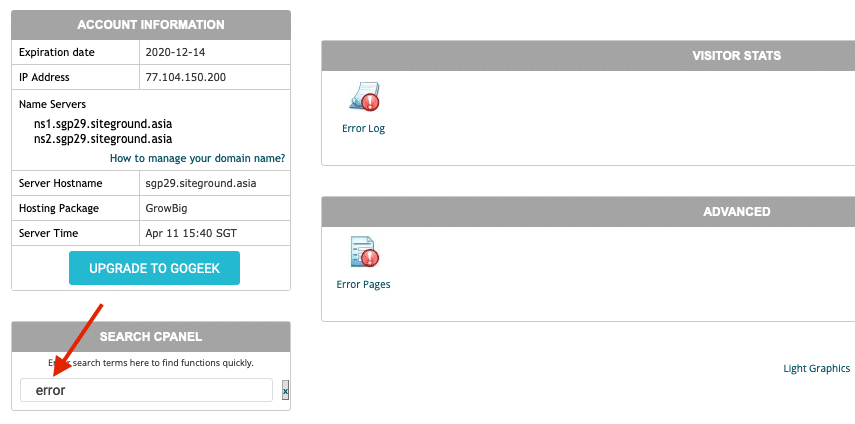

In CPanel on SiteGround (my preferred host to start a blog), you can find the Error logs under Visitors Stats.

Search for error in the Search CPANEL box.
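If you download the raw log file instead of viewing it in the control panel, a short script can summarize the errors for you. The sketch below is in Python and assumes the log uses the common Apache/Nginx access log layout. The sample lines are invented for illustration, so adjust the pattern if your host uses a different format.

```python
import re
from collections import Counter

# Matches the quoted request and the status code of a Common Log Format line.
LOG_LINE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def error_counts(lines):
    """Count (status, path) pairs for 4xx and 5xx responses."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and match.group("status")[0] in "45":
            counts[(int(match.group("status")), match.group("path"))] += 1
    return counts


# Invented sample lines, using documentation-only IP addresses.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /old-page HTTP/1.1" 404 512',
    '203.0.113.9 - - [10/Oct/2023:13:56:01 +0000] "GET / HTTP/1.1" 200 1043',
    '203.0.113.5 - - [10/Oct/2023:13:57:12 +0000] "GET /old-page HTTP/1.1" 404 512',
    '198.51.100.7 - - [10/Oct/2023:13:58:40 +0000] "POST /contact HTTP/1.1" 500 0',
]

for (status, path), hits in error_counts(SAMPLE_LOG).most_common():
    print(status, path, hits)
```

The pages with the most 404 hits are usually the first candidates for a redirect.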

17. Analyze Site Performance

There are quite a few tools and plugins to help you analyze the site’s speed and performance.

- Google PageSpeed Insights as a web interface or browser plugin,

- YSlow plugin by yslow.org,

- GTmetrix – A tool that uses Google’s PageSpeed, YSlow, as well as other tools to help you analyze pages for performance, along with suggestions that are very easy to understand and implement.

- WebPageTest – Helps you visually compare sites or pages and provides content breakdown reports that help analyze which type of content (images, JavaScript, Flash objects) takes the most time.

Bonus Tip: There are page timing reports in Google Analytics under Content > Site Speed, which show the average page load time for users from initiation of the pageview (e.g., a click on a page link) to complete loading of the page in the browser.

18. Mobile SEO

Smartphone usage is growing rapidly, not only in India but worldwide. Google has also moved to mobile-first indexing, i.e., it primarily uses the mobile version of a site for indexing and ranking, so sites that are not mobile-friendly or are too slow on mobile devices can lose visibility in mobile search results.

Making your site merely mobile-friendly is not enough anymore. The webmaster needs to make sure the site loads very fast for mobile users as well. If your website just hides a few elements on mobile devices to make the design responsive, it may work as a workaround, but it is not a good idea in the long run.

19. Check Server Header

There are multiple ways of redirecting an old page (or url) to a new page (or url).

- Meta Redirect

- Temporary 302 Header Redirect

- Permanent 301 Header Redirect

Google recommends a 301 permanent redirect, which means your server header should contain the status code “301 Moved Permanently”.

For an end-user, all the redirects behave the same way. However, to make sure the redirect is a permanent 301 for Google as well as for users, you need to be able to check the server header response and confirm it sends the right status code.

Similarly, when you delete a page, it should return 404 Not Found. Ideally, the server status code should be 410 Gone.

Use DNSChecker Server Header Checkup Tool to find and verify if your site has the right server headers.
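You can also check the header yourself with a few lines of Python. This sketch sends a HEAD request without following redirects, using the standard `http.client` module. `fetch_status` and `describe_status` are names made up for this example, and some servers may not answer HEAD requests the same way they answer GET.

```python
import http.client
from urllib.parse import urlsplit


def fetch_status(url, timeout=10):
    """Send a HEAD request without following redirects.

    Returns (status_code, location_header). Needs network access.
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        connection = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        connection = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        connection.request("HEAD", path)
        response = connection.getresponse()
        return response.status, response.getheader("Location")
    finally:
        connection.close()


def describe_status(status):
    """Translate a status code into the terms used in this section."""
    if status in (301, 308):
        return "permanent redirect"
    if status in (302, 303, 307):
        return "temporary redirect"
    if status == 404:
        return "not found"
    if status == 410:
        return "gone"
    if 200 <= status < 300:
        return "ok"
    return "other"


print(describe_status(301))
print(describe_status(302))
```

For a real check, call something like `fetch_status("http://www.example.com/old-page")` with your own URL and pass the returned status to `describe_status`. A meta redirect will show up as a plain 200 here, because the redirect lives in the HTML and not in the server header.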

20. Wayback Machine

Wayback Machine creates an archive of web pages that can be referred to in the future.

In SEO these days, a prevalent statement is – Things were working fine until.

It is also common that the client hired someone to do SEO for his or her site, and suddenly a Google Update hit them.

To view the content of the site as it was on some previous date, Wayback Machine is the right tool.

It helps understand what On-Page SEO was done (or not done for so long) on the site that made SEO traffic drop.

21. FTC Guidelines

The Federal Trade Commission, or FTC, has made it mandatory to disclose promotional or paid content as well as affiliate links, so be aware of the Complete FTC Disclosure Guidelines.

22. WordPress

WordPress is almost unavoidable in SEO. No matter what type of site you have, somewhere, you will find a connection to WordPress. A typical 5-page website for a company, an eCommerce site, or a blog can all be built in WordPress.

Moreover, WordPress has so much to offer in SEO as well. So it makes complete sense to know the basic workings of WordPress.

23. Breadcrumb

Breadcrumbs in web design refer to the navigational links which allow users to keep track of their locations on a website. A simple example of breadcrumb can be

Site Home > Section > Category > Sub-category

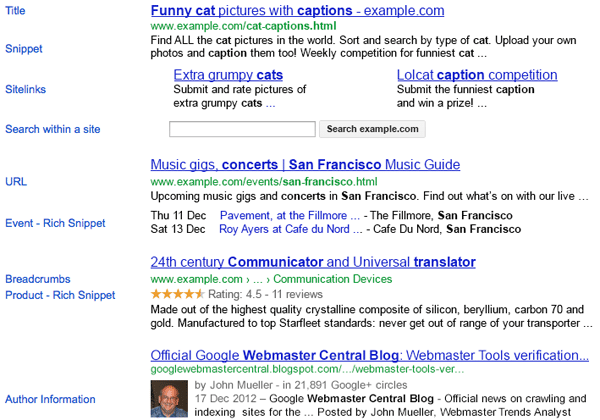

Google shows a lot of information on the search engine results page, and one such piece of information is the breadcrumb, which any kind of site can use. The same may not be true for other rich snippets like the site search box or event information.

For WordPress, Yoast SEO has breadcrumbs built in. There are also standalone breadcrumb plugins like Breadcrumb NavXT.
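If you are not on WordPress, breadcrumb markup can be generated by hand as schema.org `BreadcrumbList` data in JSON-LD. The following Python sketch builds it from a list of (name, URL) pairs. The trail below is a made-up example, and this is not necessarily how the plugins above produce their markup.

```python
import json


def breadcrumb_json_ld(trail):
    """Build BreadcrumbList JSON-LD from (name, url) pairs, home page first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical trail matching Site Home > Section > Category.
TRAIL = [
    ("Site Home", "https://www.example.com/"),
    ("Section", "https://www.example.com/section/"),
    ("Category", "https://www.example.com/section/category/"),
]

markup = breadcrumb_json_ld(TRAIL)
print('<script type="application/ld+json">')
print(markup)
print("</script>")
```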

24. Ratings

There is a misconception about rating rich snippets. The mistaken belief is that Google displays the rating of the site’s content. In reality, it shows the rating of the product being reviewed in the content, not a rating of the content itself.

As an example, if I have reviewed a car on my blog, then the rating in Google’s search result will be the rating for the car and not the rating for the article. So the rating rich snippet is for product or review related sites or pages, where you can display the rating of the product in the Google search result itself.

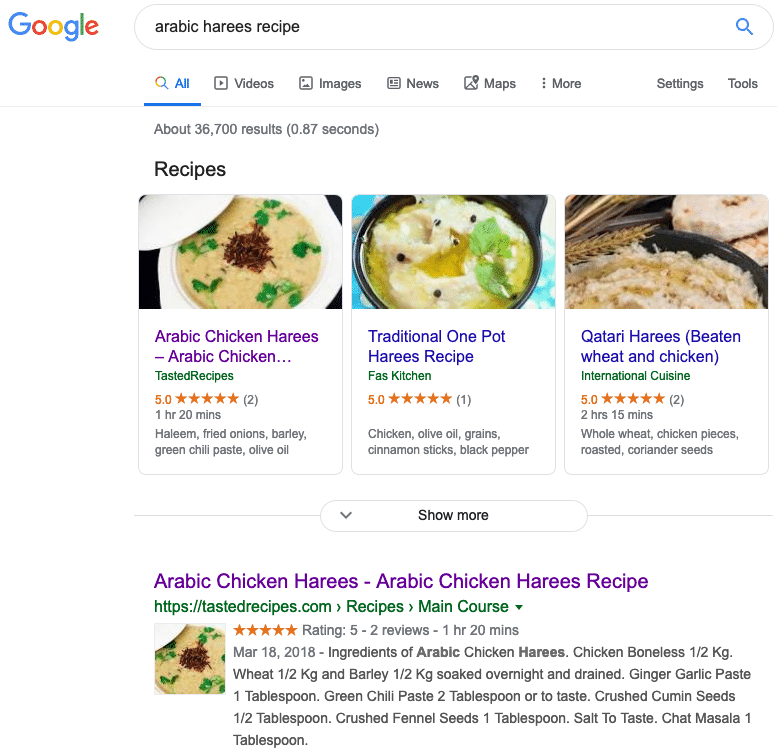

25. Other Rich Snippets

Like ratings, there are quite a few rich snippets that a webmaster can use depending on the type of site. Here is recipe data from my site TastedRecipes.com

If a website can have any structured data, make sure you add it. Use Google’s Structured Data Testing and Analyzing tool for testing.

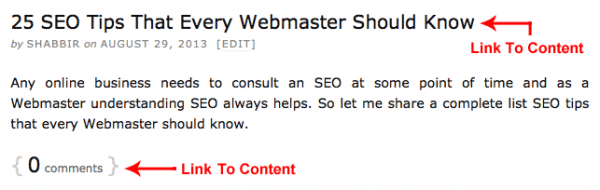

26. Link Sculpting

All the links from the home page (or other critical pages, as the case may be) may not be of the same value. So it makes sense to let your relevant pages receive maximum link juice by making the other links nofollow.

A simple example can be a blog’s home page, which can link to the articles in multiple ways.

The links that are not keyword-rich, like a Read More link or a comment link, can be made nofollow to pass more link juice from the home page to the article page through the link with keyword-rich anchor text.

Note: Privacy links, Terms of Service links, and Contact Us page links should all be nofollow.

27. All Links from the Same Page are Not Equal

Before about 2005, a link from a high PR page mattered the most. However, link building has evolved, and after 2005, links from relevant high PR pages mattered the most.

Slowly but surely, as Google tries to fight link spam, link building has evolved a lot more. What matters the most now is not only a link from a high-quality, relevant page but also the placement of the link.

A high-quality page linking to a site from a content area has a lot more impact than the same page linking to the same site from the sidebar, header, or footer area of the page.

28. Deep Links

Deep links are links to the inner pages of a website rather than the home page. There are several reasons for deep links, but the most important one is that they enhance the user experience by sending users directly to the page they are interested in. Apart from that, deep links come with a lot of other benefits like

- Deep Links Boost Authority of the Entire Site – Every internal page of your site links back to the home page (either in the navigational menu or at least in the logo). So an external link to an internal page contributes to the overall authority of the domain. However, if the same external link points to the home page, it may not add as much value to all the internal pages.

- Varied Anchor Text May Not Help Rank the Home Page for All the Inner Pages’ Terms – You neither can nor want to rank your home page for all the keywords for your site. Ideally, we create different pages that contain keywords in the title, meta, URL, and body, which helps search engines crawl those internal pages and rank them for those terms. So it makes much more sense to build links to those pages with anchor text related to their crucial terms than to get links to the home page with those terms.

29. Disavow tools

The Disavow tool lets you submit a list of sites or pages whose links to your site you want removed but can’t get removed despite all your efforts.

The Disavow tool doesn’t remove the links for you. However, it lets the search engine know that you would like those links to be ignored.

Note that the Disavow tool is not a cure for all bad links, so ideally, you should get the bad links removed. Only if you can’t get them removed after all your efforts should you opt for the Disavow tool.

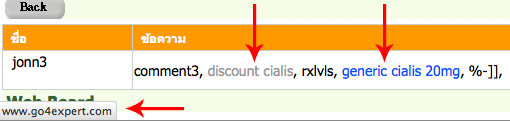
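The file you upload to Google’s Disavow tool is plain text with one entry per line: either a full URL, or `domain:` followed by a domain name to disavow every link from that domain. Lines starting with `#` are comments. Here is a minimal Python sketch that assembles such a file. The domains and URL are placeholders, and `build_disavow_file` is a name made up for this example.

```python
def build_disavow_file(domains, urls, note=""):
    """Return disavow file text: optional comment, domain lines, then URLs."""
    lines = []
    if note:
        lines.append("# " + note)
    # De-duplicate and sort so repeated exports produce identical files.
    lines.extend("domain:" + domain for domain in sorted(set(domains)))
    lines.extend(sorted(set(urls)))
    return "\n".join(lines) + "\n"


# Placeholder entries for illustration only.
disavow_text = build_disavow_file(
    domains=["spam-links.example", "spam-links.example", "link-farm.example"],
    urls=["http://bad-directory.example/listing.html"],
    note="Owners did not respond to removal requests",
)
print(disavow_text)
```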

30. Bad links

Bad links can impact your site so much that Google can take your pages out of its search index. So it is essential to know which links are bad for your website and to try to get them removed or disavow them. Some of the bad links as per Google include

- Paid Links

- Excessive Reciprocal Links

- Link Schemes and Link wheels.

- Blog Networks

- Sitewide do-follow Links

Any other link meant to game the search engine’s ranking is a bad link.

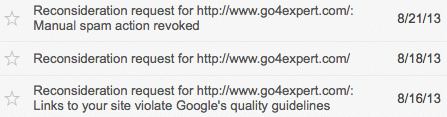

31. Manual Spam Action

Manual spam action on a site is not the end of life.

One school of thought is that if you act on a manual spam notice for your site, you confirm the spam, but that is not what I believe.

I saw a manual spam action notice in August 2013 for Go4Expert.com, took the needed action, and then filed a reconsideration request.

Within a few days, the manual spam action was revoked. In my opinion, a manual spam action is a good sign because you have been told what you need to do to get the action revoked.

32. Difference between site De-indexed and Site Penalized

If a site is penalized for some reason (unnatural links, thin content, the website being slow), there are chances that it can recover from the penalty if the reason for the penalty is taken care of.

However, if the site is de-indexed by Google, it’s nearly impossible to get it back. It usually means the site was penalized for an extended period, and the cause of the penalty was not worked upon, so slowly but surely, the site was de-indexed by Google. It is the ultimate penalty that any site can get, and it possibly means the end of life for that site and the domain.

33. X-Robots-Tag

Not all the content on a website may be in HTML (content like XML, XHTML, RSS …), but meta tags (<meta name="robots" content="nofollow" />) are confined to HTML content only.

The X-Robots-Tag can be used in the HTTP response header for any given URL to give directives to robots. Any directive valid for the robots meta tag is also valid for X-Robots-Tag.

As a webmaster reading basic SEO tips, it is essential to know that it is possible to nofollow, say, a CSS file. You may not add those headers yourself, but knowing it is possible means you can hire a person to get it done.

You can read about X-Robots-Tag here if you are curious.
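To give a rough idea of what your developer would be doing, here is a sketch in Python of a helper that decides which responses should carry the header. The list of extensions is a made-up policy for illustration only. In practice, this is usually configured in the web server rather than in application code.

```python
import posixpath

# Hypothetical policy: non-HTML file types to keep out of the index.
NOINDEX_EXTENSIONS = {".pdf", ".xml", ".rss"}


def x_robots_headers(path):
    """Return extra response headers for the requested path."""
    extension = posixpath.splitext(path)[1].lower()
    if extension in NOINDEX_EXTENSIONS:
        return {"X-Robots-Tag": "noindex, nofollow"}
    return {}


print(x_robots_headers("/downloads/price-list.pdf"))
print(x_robots_headers("/articles/seo-tips.html"))
```

The header value uses the same directives as the robots meta tag, so `noindex, nofollow` means the same thing in both places.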

34. Content Scrapers

Content scrapers are sites that steal content from other sites without permission and post it on their own website. They use automated software to fetch content from RSS feeds or to parse a web page’s HTML and extract the content of the article.

You can take the needed action based on the way content is being scraped from your site. If you see a lot of manual copy-paste spam, you can append a link to your article whenever anyone copies the content. If content scrapers are using your site’s RSS feed, you can add a link to your website in the feed.

Then again, because they are scraping your content, getting a link from them can be considered bad as well. Still, letting them just copy isn’t a good idea either.

Google is smart enough to tell the original author of the content from the copy.

35. Optimize Images

Image optimization can be an essential part of building a successful website, and here are a few things that you should follow when using images in your web pages.

- Name the Images Correctly – You can always keep the name that your camera gives images, like DSCN1415.jpg. However, it’s recommended to name image files after the content of the image, which in turn can help you rank well in image searches for related terms.

- Always Have Alt Text for Images – What if, for some reason, the image could not be loaded in the user’s browser? Ideally, you want users to see something that makes sense as an alternative to the image. So it makes sense to have not just an alt attribute for pictures but a descriptive one.

- Use Exact Dimension Images – Loading a larger image into a smaller image area means your user downloads a lot more data than needed, and showing a stretched image may lead to a bad user experience. In either case, loading an image of the exact size is the solution.

- Use the File Type with the Minimum File Size – No single file type (JPG, GIF, or PNG) gives the minimum size for every image. Opt for the one that gives the minimum size for your particular image.

- Use a NextGen Image Format – WebP is a newer image format that drastically reduces the size of the image without visibly degrading the quality of the picture.

For my WordPress site, I use Imagify to compress, optimize, and generate WebP versions of images. However, there are other tools like TinyPNG.com.
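The alt text tip above is easy to audit with a script. Here is a minimal Python sketch that lists images with missing or blank alt text. The sample markup is made up for illustration. Keep in mind that an empty alt attribute can be a deliberate choice for purely decorative images, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class ImageAltAuditor(HTMLParser):
    """Record the src of every <img> whose alt text is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if not (attributes.get("alt") or "").strip():
            self.missing_alt.append(attributes.get("src"))


def images_missing_alt(html):
    """Return the src values of images that need alt text reviewed."""
    auditor = ImageAltAuditor()
    auditor.feed(html)
    return auditor.missing_alt


# Hypothetical page fragment used only for this example.
SAMPLE_PAGE = (
    '<img src="red-hatchback.jpg" alt="Red hatchback parked outside">'
    '<img src="DSCN1415.jpg">'
    '<img src="logo.png" alt=" ">'
)

print(images_missing_alt(SAMPLE_PAGE))
```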

36 & 37. Minify and Beautify CSS and JavaScript

To minify or beautify CSS, JavaScript code manually, I use the following tools to get the job done.

38. Negative SEO

SEO is a zero-sum game. It means you will gain in ranking for a keyword only when someone else loses their position for that keyword.

I have seen tons of links to my site from Russian domains with spam keywords.

It was a case of negative SEO, and I was severely impacted by it. I had to get those links removed as well as disavow them. The manual spam action that I mentioned earlier was for these links only.

Negative SEO exists. So always keep an eye on how and where you are gaining links.

39. Related Content

You may not be able to link to all the relevant articles of your site within the content. So at the end of the article, you can give readers an option to follow along with similar content. It not only helps keep your user engaged but also helps in building deep links to associated articles on your site.

A complete win-win for everyone – the reader, who has more to explore; the webmaster, who gets more reader engagement and page views; and Google, which wants to make sure searchers get what they are looking for on the site.

40. A/B Testing With No Impact on SEO

A/B testing is a randomized experiment with two variations, A and B, used to identify the elements that maximize performance metrics. For A/B testing, you have to have multiple variations of the same page with very small differences between them, and so you need to take care of a few things.

- If variation page A redirects to page B, use a 302 temporary redirect and not a 301 permanent redirect. Serve the Google user agent the same way as any other visitor, because Google’s testing guidelines treat showing Googlebot different content as cloaking.

- Variation page B should not get indexed in Google, or else you may have duplicate content issues. So apply the “noindex” meta tag to page B and also specify page A as the canonical URL in the head of page B.

- Once the experiment is over, have a 301 permanent redirect from page B to page A just to be on the safe side.

41. Multivariate Testing

A/B testing requires A and B versions of the page for performing the test. Multivariate testing, in contrast, can be carried out by dynamically changing parts of a webpage to display different content variations to visitors in equal proportion.

So multivariate testing does not have to impact SEO from the URL’s point of view. However, one needs to be careful about changing the key elements of the page, like the title of the page or content within the heading (Hx) elements, because Google may see a change in keywords in critical areas of the page.

Google encourages A/B testing as well as multivariate testing and provides guidelines for testing to get more visitors and conversions on your website. You just have to follow the instructions.

42. HTTP Status Codes

Here are some commonly used HTTP status codes

- 200 OK/Success

- 301 Moved Permanently

- 302 Found (Temporary Redirect)

- 404 Not Found

- 410 Gone (Content Removed)

- 500 Internal Server Error

- 503 Service Unavailable

The complete list is here
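If you ever need the official name for a status code, Python’s standard library already knows them. The short sketch below prints the standard reason phrase for the codes listed above, using `http.HTTPStatus`. The helper `status_line` is a name made up for this example.

```python
from http import HTTPStatus

# The status codes discussed in this section.
CODES = [200, 301, 302, 404, 410, 500, 503]


def status_line(code):
    """Return the code followed by its standard reason phrase."""
    return f"{code} {HTTPStatus(code).phrase}"


for code in CODES:
    print(status_line(code))
```

Note that the standard phrase for 302 is “Found” and for 410 is “Gone”, even though they are commonly described as a temporary redirect and removed content.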

Finally, SEO is an On-Going Task

SEO is ever-evolving, so keep yourself updated. Reading some 40 tips is not everything. You should be reading the best search engine blogs. Here is the complete list of great SEO blogs to follow. At times, the focus may change from one aspect of SEO (like link building) to other elements of SEO (maybe site speed or long-form content).

Finally, if I have to share the best SEO tips for a webmaster, it has to be – Don’t Try to Game the Search Engines, Play with them.